It takes roughly one minute. That’s how fast One-2-3-45++, an innovative artificial intelligence (AI) method for 3D object generation, can create a high-fidelity 3D object from a single RGB image.

UC San Diego’s Hao Su, an associate professor in the Department of Computer Science and Engineering (CSE), and researchers from UC San Diego, Zhejiang University, Tsinghua University, UCLA, and Stanford University have proposed One-2-3-45++ in a new research paper. The method aims to overcome the shortcomings of its predecessor, One-2-3-45.

The work will be presented at the CVPR 2024 conference, which begins June 17 in Seattle.

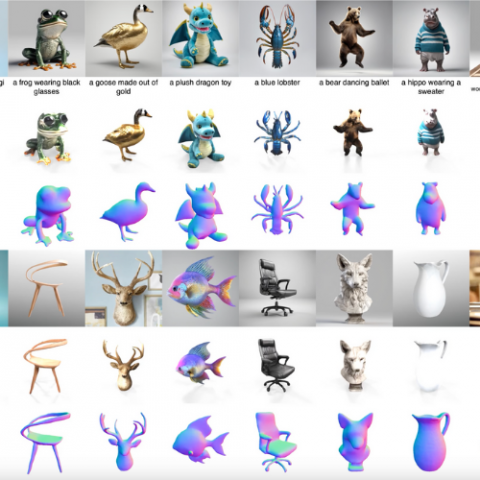

One-2-3-45++ first uses a fine-tuned 2D diffusion model to generate a set of consistent multi-view images of the object from the input image. A pair of 3D-native diffusion networks then lifts these images into 3D.

Extensive experimental evaluations suggest that the new approach can generate 3D assets rapidly while producing high-quality, diverse results that closely mirror the original input image. If these results hold in practice, One-2-3-45++ could significantly boost the efficiency and creativity of 3D game artists.

Advances in 3D object generation are just the latest of Su’s innovations at the intersection of AI and visual computing. Su is affiliated with the university’s Center for Visual Computing, Contextual Robotics Institute and Artificial Intelligence Group, among others. Through embodied AI and 3D vision, he is exploring the loop between perception, cognition and task performance.

Su’s pioneering research uses 3D point cloud data to build robotic systems that can learn from their interactions with the world. For humans, the ability to translate visual perception into a planned motor task begins at birth and continues to develop through exposure to developmentally appropriate activities. In robotics, replicating these human-like visuomotor skills has remained an elusive goal.

Su is leveraging AI to help robots develop the same robust and adaptable visuomotor skills as humans. Toward this objective, he is building a large-scale simulation environment where robotic systems can be trained to see, reason and interact with complex environments before being deployed in the real world.

Advances in this area could enable new applications and have a transformative impact across many domains, from manufacturing and civil engineering to agriculture and health care, as well as space and underwater exploration.

Su recently earned the National Science Foundation’s Faculty Early Career Development (CAREER) award to support this ongoing research, which builds on his foundational work in 3D deep learning and in designing backbone architectures for point cloud learning.

--By Kimberley Clementi