Smartphones have turned Thomas Guides into relics. Navigating by virtual landmarks has become an immersive experience. Novices are creating lifelike cinematic content from photos. And an entire metaverse has welcomed users to shop, work, play and hang out.

The breakthrough in 3D representation came in 2020. That’s when Ravi Ramamoorthi, a professor in UC San Diego’s Department of Computer Science and Engineering (CSE) and director of the Center for Visual Computing (VisComp), and collaborators introduced Neural Radiance Fields (NeRF) at the European Conference on Computer Vision.

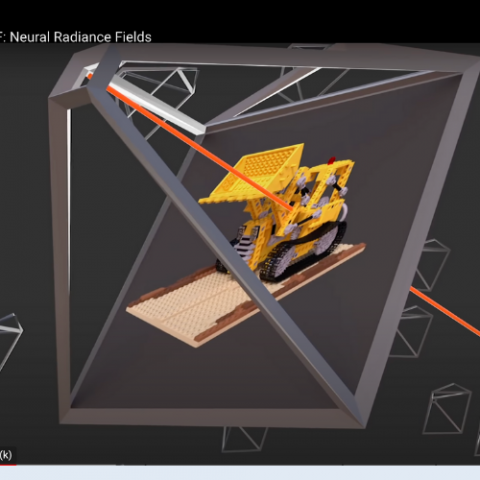

NeRF represents a 3D scene as a continuous volume, a distinctive departure from conventional geometric representations like meshes or disparity maps. A neural network known as a multi-layer perceptron (MLP) is queried at points in the scene and returns volumetric parameters such as view-dependent color and volume density. This allows users to create immersive renderings and compelling fly-throughs from a few source images.
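For readers who want to see the idea in code, here is a minimal sketch of how color and density samples along a single camera ray can be blended into one pixel, using the standard volume-rendering quadrature. It is an illustration under stated assumptions, not the researchers' implementation: `toy_radiance_field` is a hypothetical hand-written function standing in for the trained MLP, and the names, sample count and scene (a red sphere) are invented for the example.

```python
import math

def toy_radiance_field(position, view_dir):
    """Hypothetical stand-in for a trained MLP (an assumption for this sketch).

    Maps a 3D point and a viewing direction to (rgb, density).
    The "scene" is a solid sphere of radius 1 centered at the origin.
    """
    x, y, z = position
    inside = x * x + y * y + z * z <= 1.0
    density = 5.0 if inside else 0.0
    # View-dependent color: the red channel changes with the viewing direction.
    shade = 0.5 + 0.5 * abs(view_dir[2])
    return (shade, 0.2, 0.2), density

def render_ray(field, origin, direction, near=0.0, far=6.0, num_samples=64):
    """Composite samples along one ray into a pixel color and an opacity."""
    step = (far - near) / num_samples
    transmittance = 1.0          # fraction of light not yet absorbed
    color = [0.0, 0.0, 0.0]
    for i in range(num_samples):
        t = near + (i + 0.5) * step   # midpoint of the i-th segment
        point = tuple(o + t * d for o, d in zip(origin, direction))
        rgb, density = field(point, direction)
        alpha = 1.0 - math.exp(-density * step)   # opacity of this segment
        weight = transmittance * alpha
        for k in range(3):
            color[k] += weight * rgb[k]
        transmittance *= 1.0 - alpha
    return tuple(color), 1.0 - transmittance

# One ray through the sphere's center, one ray that misses the sphere.
hit_color, hit_opacity = render_ray(toy_radiance_field, (0.0, 0.0, -3.0), (0.0, 0.0, 1.0))
miss_color, miss_opacity = render_ray(toy_radiance_field, (0.0, 2.0, -3.0), (0.0, 0.0, 1.0))
```

The ray that passes through the sphere ends up almost fully opaque, while the ray that misses it stays transparent and black. In a real NeRF, the field would be a learned network and one such ray would be rendered for every pixel of the output image.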

Google Maps, Google Street View, Luma AI and countless other technologies and apps across computer graphics have made NeRF the representation of choice for problems in view synthesis and image-based rendering. There are also industrial applications and uses in metrology and astronomy.

Ramamoorthi received a Frontiers of Science Award in 2023 for his work on NeRFs. Recently, Ramamoorthi and fellow researchers presented an extension to real-time radiance fields for portraits at SIGGRAPH 2023, one that enables view synthesis in real time from a single portrait image captured with a conventional cell phone camera.

--By Kimberley Clementi